At its re:Invent conference on Tuesday, Amazon Web Services (AWS), Amazon’s cloud computing division, announced a new family of generative AI, multimodal models called Nova.

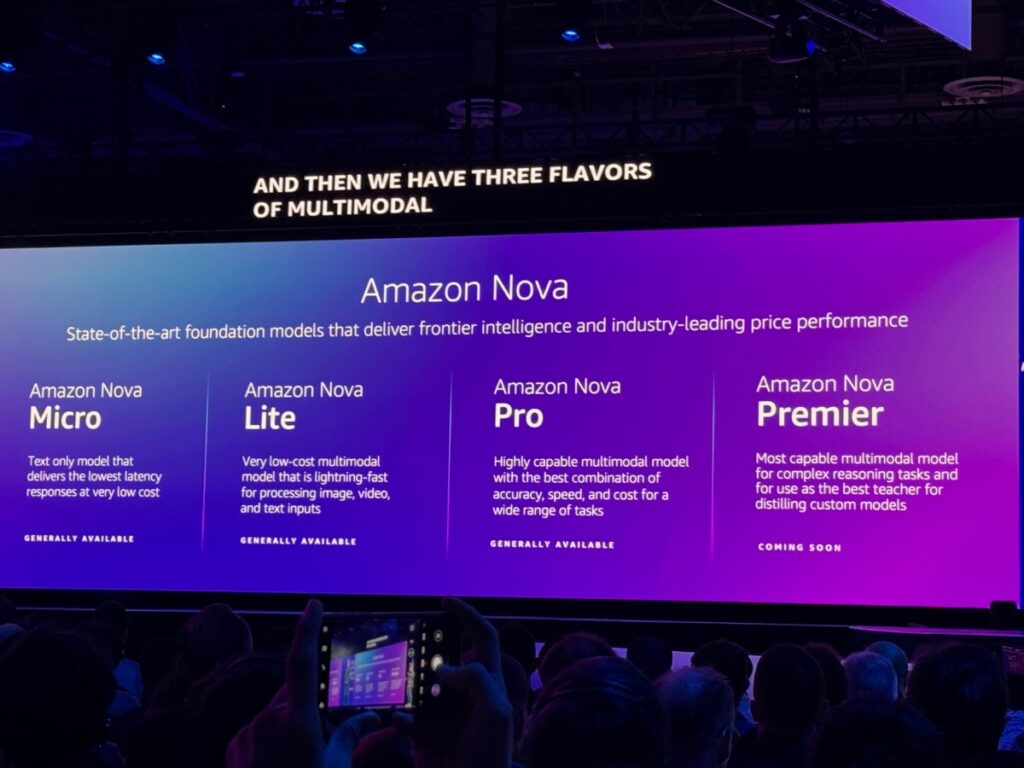

There’s four text-generating models in total: Micro, Lite, Pro, and Premier. Micro, Lite, and Pro are available today for AWS customers, while Premier will arrive in early 2025, Amazon CEO Andy Jassy said onstage.

In addition to those, there’s an image generation model, Nova Canas, and a video-generating model, Nova Reel. Both also launched on AWS this morning.

“We’ve continued to work on our own frontier models,” Jassy said, “and those frontier models have made a tremendous amount of progress over the last four to five months. And we figured, if we were finding value out of them, you would probably find value out of them.”

Micro, Lite, Pro, and Premier

The text-generating Nova models, which are optimized for 15 languages (primarily English), are differentiated by their capabilities and sizes, mainly.

Micro, which can only take in text and output text, delivers the lowest latency of the bunch — processing text and generating responses the fastest. Lite can process image, video, and text inputs reasonably quickly. Pro offers the best combination of accuracy, speed, and cost for a range of tasks. And Premier is the most capable, designed for complex workloads.

Pro and Premier, like Lite, can analyze text, images, and video. All three are well-suited for tasks like digesting documents and summarizing charts, meetings, and diagrams. AWS is positioning Premier, however, as more of a “teacher” model for creating tuned custom models, rather than a model to be used on its own.

Micro has a 128,000-token context window, meaning it can process up to around 100,000 words. Lite and Pro have 300,000-token context windows, which works out to around 225,000 words, 15,000 lines of computer code, or 30 minutes of footage.

In early 2025, certain Nova models’ context windows will expand to support over 2 million tokens, AWS says.

Jassy claims the Nova models are among the fastest in their class — and among the least expensive to run. They’re available in AWS Bedrock, Amazon’s AI development platform, where they can be fine-tuned on text, images, and video and distilled for improved speed and higher efficiency.

“We’ve optimized these models to work with proprietary systems and APIs, so that you can do multiple orchestrated automatic steps — agent behavior — much more easily with these models,” Jassy added. “So I think these are very compelling.”

Canas and Reel

Canvas and Reel are AWS’ strongest play yet for generative media.

Canvas lets users generate and edit images using prompts (for example, to remove backgrounds), and provides controls for the generated images’ color schemes and layouts. Reel, the more ambitious of the two models, creates videos up to six seconds in length from prompts and optionally reference images. Using Reel, users can adjust the camera motion to generate videos with pans, 360-degree rotations, and zoom.

Reel’s currently limited to six-second videos (which take ~3 minutes to generate), but a version that can create two-minute-long videos is “coming soon,” according to AWS.

Here’s a sample:

And another:

And here’s images from Canvas:

Jassy stressed that both Canvas and Reel have “built-in” controls for responsible use, including watermarking and content moderation. “[We’re trying] to limit the generation of harmful content,” he said.

AWS expanded on the safeguards in a blog post, saying that Nova “extends [its] safety measures to combat the spread of misinformation, child sexual abuse material, and chemical, biological, radiological, or nuclear risks.” It’s unclear what this means in practice, however.

AWS also continues to remain vague about which data, exactly, it uses to train all its generative models. The company previously told TechCrunch only that it’s a combination of proprietary and licensed data.

Few vendors readily reveal such information. They see training data as a competitive advantage and thus keep it — and info relating to it — a closely guarded secret. Training data details are also a potential source of IP-related lawsuits, another disincentive to reveal much.

In lieu of transparency, AWS offers an indemnification policy that covers customers in the event one of its models regurgitates (i.e. spits out a mirror copy of) a potentially copyrighted still.

So, what’s next for Nova? Jassy says that AWS is working on a speech-to-speech model — a model that’ll take speech in and output a transformed version of it — for Q1 2025, and an “any-to-any” model for around mid-2025.

The speech-to-speech model will also be able to interpret verbal and nonverbal cues, like tone and cadence, and deliver natural, “human-like” interactions, Amazon says. As for the any-to-any model, it’ll theoretical power applications from translators to content editors to AI assistants.

“You’ll be able to input text, speech, images, or video and output text, speech, images, or video,” Jassy said of the any-to-any model. “This is the future of how frontier models are going to be built and consumed.”