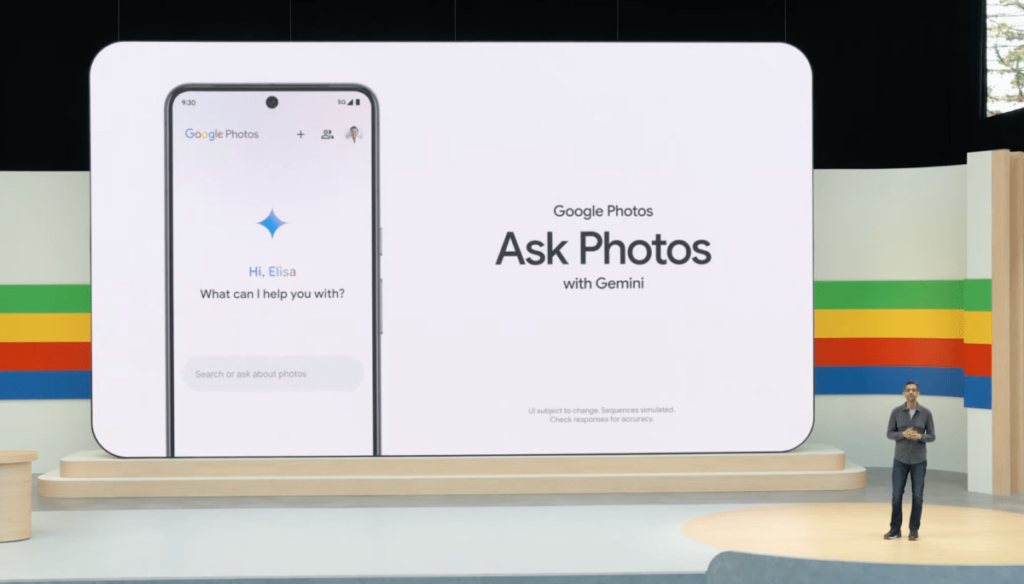

Google Photos is getting an AI infusion with the launch of an experimental feature, Ask Photos, powered by Google’s Gemini AI model. The new addition, which rolls out later this summer, will allow users to search across their Google Photos collection using natural language queries that leverage an AI’s understanding of their photos’ content and other metadata.

Users could previously search for specific people, places, or things in their photos, thanks to natural language processing. The AI upgrade will make finding the right content more intuitive and less of a manual search process, Google announced Tuesday at its annual Google I/O 2024 developer conference.

For instance, instead of searching for something specific in your photos, such as “Eiffel Tower,” you can now ask the AI to do something much more complex, like find the “best photo from each of the National Parks I visited.” The AI uses a variety of signals to determine what makes the photo the “best” of a given set, including things like lighting, blurriness, lack of background distortion, and more. It can then combine that with its understanding of the geolocation of a set of photos or dates to retrieve only those images taken at U.S. National Parks.
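To make that idea concrete, here is a minimal Python sketch of picking a "best" photo per location. It is purely illustrative and is not Google's method: the `Photo` fields, the equal weighting in `quality`, and the `best_per_place` helper are all assumptions invented for this example, and a real system would draw on many more signals.

```python
from dataclasses import dataclass


@dataclass
class Photo:
    name: str
    place: str        # hypothetical place label derived from geolocation
    lighting: float   # assumed precomputed score, 0.0 (poor) to 1.0 (good)
    sharpness: float  # assumed precomputed score, 0.0 (blurry) to 1.0 (sharp)


def quality(photo: Photo) -> float:
    # Illustrative equal weighting of two signals; the real weighting is unknown.
    return 0.5 * photo.lighting + 0.5 * photo.sharpness


def best_per_place(photos: list[Photo], places: set[str]) -> dict[str, Photo]:
    # Keep only photos taken at the requested places, then track the
    # highest-scoring photo seen so far for each place.
    best: dict[str, Photo] = {}
    for photo in photos:
        if photo.place not in places:
            continue
        current = best.get(photo.place)
        if current is None or quality(photo) > quality(current):
            best[photo.place] = photo
    return best


photos = [
    Photo("a.jpg", "Yosemite", lighting=0.9, sharpness=0.5),
    Photo("b.jpg", "Yosemite", lighting=0.6, sharpness=0.9),
    Photo("c.jpg", "Zion", lighting=0.8, sharpness=0.8),
    Photo("d.jpg", "Paris", lighting=1.0, sharpness=1.0),
]

result = best_per_place(photos, places={"Yosemite", "Zion"})
for place, photo in result.items():
    print(place, photo.name)
```

In this toy data, the Paris photo is filtered out because it was not taken at one of the requested places, and each remaining place keeps only its highest-scoring image.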

This feature builds on the recent launch of Photo Stacks in Google Photos, which groups together near-duplicate photos and uses AI to highlight the best photos in the group. As with Photo Stacks, the aim is to help people find the photos they want as their digital collections grow. To give an idea of scale, more than 6 billion images are uploaded to Google Photos daily, according to Google.

In addition, the “Ask Photos” feature will allow users to ask questions to get other sorts of helpful answers. Beyond asking for the best photos from a vacation or some other group, users can ask questions that require an almost human-like understanding of what’s in their photos.

For instance, a parent could ask Google Photos what themes they had used for their child’s last four birthday parties, and it could return a simple answer along with photos and videos about the mermaid, princess, and unicorn themes that were previously used and when.

This type of query is made possible because Google Photos doesn’t just understand the keywords you’ve entered but also the natural language concepts, like “themed birthday party.” It can also take advantage of the AI’s multimodal abilities to understand if there’s text in a photo that may be relevant to the query.

Another example demoed to the press by CEO Sundar Pichai ahead of today’s Google I/O developer conference showed a user asking the AI to show them their child’s swimming progress. The AI packaged up highlights of photos and videos of the child swimming over time.

Another new feature uses search to find answers from text in photos. That way, you could snap a photo of something you want to remember later, like your license plate or passport number, and then ask the AI to retrieve that information when you need it.

If the AI ever gets things wrong and you correct it — perhaps flagging a photo that’s not from a birthday party or one you wouldn’t highlight from your vacation — it will remember that response to improve over time. This also means the AI becomes more personalized to you the longer you interact with it.

When you find photos you’re ready to share, the AI can help draft a caption that summarizes the content of the photos. For now, however, this is a basic summary that doesn’t offer a choice of styles. (But considering it’s using Gemini under the hood, a smartly written prompt might work to return a certain style if you try it.)

Google says it will have guardrails in place so the AI does not respond in certain cases (perhaps no asking the AI for the “best nudes”?). It also didn’t include potentially offensive content when training the model. But the feature is launching as an experiment, so additional controls may need to be added over time as Google responds to how people put it to use.

The Ask Photos feature will initially be supported in the U.S. in English before rolling out to more markets. It will also only be a text-based feature for now, similar to asking questions of an AI chatbot. Over time, though, it could become integrated more deeply with Gemini running on the device, as on Android.

The company says users’ personal data in Google Photos is not used for ads. Humans also won’t review AI conversations and personal data in Ask Photos, except “in rare cases to address abuse or harm,” Google says. People’s personal data in Google Photos also isn’t used to train any other generative AI product, like Gemini.