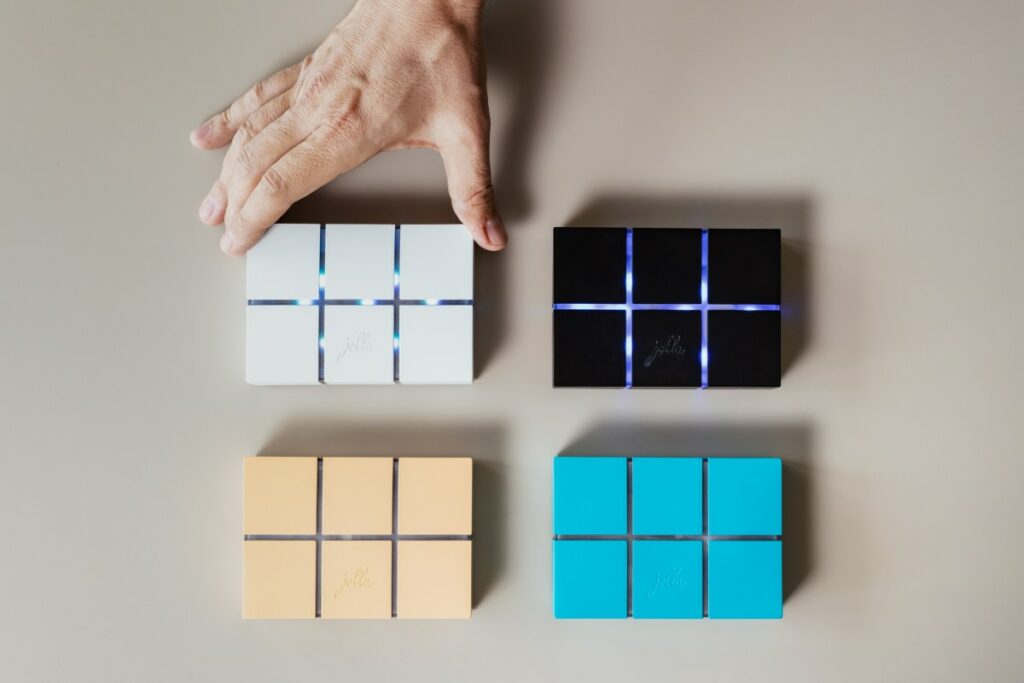

Jolla has taken the official wraps off the first version of its personal server-based AI assistant in the making. The reborn startup is building a privacy-focused AI device — aka the Jolla Mind2, which TechCrunch exclusively revealed at MWC back in February.

At a livestreamed launch event Monday, it also kicked off preorders, with the first units slated to ship later this year in Europe. Global preorders open in June, with plans to ship later this year or early next.

In the two+ months since we saw the first 3D-printed prototype of Jolla’s AI-in-a-box, considerable hype has swirled around other consumer-focused AI devices, such as Humane’s Ai Pin and the Rabbit R1. But early interest has deflated in the face of poor or unfinished user experiences — and a sense that nascent AI gadgets are heavy on experimentation, light on utility.

The European startup behind Jolla Mind2 is keen for its AI device not to fall into this trap, per CEO and co-founder Antti Saarnio. That’s why they’re moving “carefully”, trying to avoid the pitfall of overpromising and underdelivering.

“I’m sure that this is one of the biggest disruptive moments for AI — integrating into our software. It’s massive disruption. But the first approaches were rushed, basically, and that was the problem,” he told TechCrunch. “You should introduce software which is actually working.”

The feedback is tough, but fair in light of recent launches.

Saarnio says the team is planning to ship the first few hundred units (up to 500) of the device to early adopters in Europe this fall, likely tapping into the community of enthusiasts it built up around earlier products such as its Sailfish mobile OS.

Pricing for the Jolla Mind2 will be €699 (including VAT) — so the hardware is considerably more expensive than the team had originally planned. But there’s also more on-board RAM (16GB) and storage (1TB) than they first budgeted for. Less good: Users will have to shell out for a monthly subscription starting at €9.99. So this is another AI device that’s not going to be cheap.

AI agents living in a box

The Jolla Mind2 houses a series of AI agents tuned for various productivity-focused use cases. They’re designed to integrate with relevant third-party services (via APIs) so they can execute different functions for you. Examples include an email agent that can triage your inbox and compose and send messages, and a contacts agent, briefly demoed by Jolla at MWC, that can be a repository of intel about people you interact with to keep you on top of your professional network.

In a video call with TechCrunch ahead of Monday’s official launch, Saarnio demoed the latest version of Jolla Mind2 — showing off a few features we hadn’t seen before, including the aforementioned email agent; a document preview and summarizing feature; an e-signing capability for documents; and something new it’s calling “knowledge bases” (more below).

The productivity-focused features we saw being demoed were working, although there were some notable latency issues. An apologetic Saarnio said demo gremlins had struck earlier in the day, causing the last-minute performance issues.

Switching between agents was also manual in the demo of the chatbot interface, but he said this would be automated in the final product through the AI’s semantic understanding of user queries.
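To make the idea of automated agent switching concrete, here is a minimal sketch of a query router. It is not Jolla’s implementation: the agent names, the keyword sets and the `pick_agent` function are all hypothetical, and plain keyword overlap stands in for the semantic understanding Saarnio describes.

```python
# Hypothetical agent names and trigger words, chosen for illustration only.
AGENT_KEYWORDS = {
    "email": {"email", "inbox", "reply", "send"},
    "calendar": {"meeting", "calendar", "schedule"},
    "contacts": {"contact", "colleague", "phone"},
}


def pick_agent(query: str, default: str = "chat") -> str:
    """Return the agent whose keywords overlap most with the query.

    Keyword overlap is a crude stand-in for semantic matching; a real
    system would more likely use the language model itself to classify.
    """
    words = set(query.lower().split())
    scores = {name: len(words & kws) for name, kws in AGENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # Fall back to a general chat agent when nothing matches.
    return best if scores[best] > 0 else default
```

Under these assumptions, a request such as “triage my inbox and reply to Anna” would land with the email agent, while an unrelated question would fall through to the default chat agent.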

Planned AI agents include: a calendar agent; storage agent; task management; message agent (to integrate with third-party messaging apps); and a “coach agent”, which they intend to tap into third-party activity/health tracking apps and devices to let the user query their quantified health data on device.

The promise of private, on-device processing is the main selling point for the product. Jolla insists user queries and data remain securely on the hardware in the user’s possession. The alternative, if you use OpenAI’s ChatGPT for example, is your personal info being sucked up into the cloud for commercial data mining and someone else’s profit opportunity.

Privacy sounds great but clearly latency will need to be reduced to a minimum. That’s doubly important, given the productivity and convenience ‘prosumer’ use-case Jolla is also shooting for, alongside its core strategic focus on firewalling your personal data.

The core pitch is that the device’s on-board AI model, which has roughly 3 billion parameters (Saarnio refers to it as a “small language model”), can be hooked up to all sorts of third-party data sources. That makes the user’s information available for further processing and extensible utility, without them having to worry about the safety or integrity of their info being compromised as they tap into the power of AI.

For queries where the Jolla Mind2’s local AI model might not suffice, the system will provide users with the option of sending queries ‘off world’, to third-party large language models (LLMs), while making them aware that doing so means they’re sending their data outside the safe and private space. Jolla is toying with some form of color-coding for messages to signify the level of data privacy that applies (e.g. blue for full on-device safety; red for yikes your data is exposed to a commercial AI so all privacy bets are off).
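The opt-in routing and color-coding described here could be sketched roughly as follows. This is an illustration under stated assumptions, not Jolla’s design: `PrivacyLevel`, `RoutedQuery` and `route_query` are hypothetical names, and the only details taken from the company are the blue and red labels and the fact that the user chooses whether a query leaves the device.

```python
from dataclasses import dataclass
from enum import Enum


class PrivacyLevel(Enum):
    ON_DEVICE = "blue"  # query is handled by the local model only
    EXTERNAL = "red"    # query is sent to a third-party LLM


@dataclass
class RoutedQuery:
    text: str
    level: PrivacyLevel


def route_query(text: str, local_can_answer: bool, user_allows_external: bool) -> RoutedQuery:
    """Keep a query on-device unless the local model falls short AND the user opts in."""
    if local_can_answer or not user_allows_external:
        return RoutedQuery(text, PrivacyLevel.ON_DEVICE)
    return RoutedQuery(text, PrivacyLevel.EXTERNAL)
```

The point of the sketch is the default: nothing is labeled red, or sent anywhere, unless the user has explicitly agreed to it.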

Saarnio confirmed performance will be front of mind for the team as they work on finessing the product. “It’s basically the old rule that if you want to make a breakthrough it has to be five times better than the existing solutions,” he said.

Security will also absolutely need to be a priority. The hardware will do things like set up a private VPN connection so the user’s mobile device or computer can securely communicate with the device. Saarnio added that there will be an encrypted cloud-based back-up of user data that’s stored on the box in case of hardware failure or loss.

Which zero-knowledge encryption architecture they choose to ensure no external access to the data is possible will be an important consideration for privacy-conscious users. Those details are still being figured out.

AI hardware with a purpose?

One big criticism that’s been leveled at early AI devices like Humane’s Ai Pin and the Rabbit R1 takes the form of an awkward question: Couldn’t this just be an app? Given, y’know, everyone is already packing a smartphone.

It’s not an attack line that obviously applies to the Jolla Mind2. For one thing the box housing the AI is intended to be static, not mobile. Kept somewhere safe at home or the office. So you won’t be carrying two chunks of hardware around most of the time. Indeed, your mobile (or desktop computer) is the usual tool for interacting with Jolla Mind2 — via a chatbot-style conversational interface.

The other big argument Saarnio makes to justify Jolla Mind2 as a device is that trying to run a personal server-style approach to AI processing in the cloud would be hard — or really expensive — to scale.

“I think it would become very difficult to scale cloud infrastructure if you would have to run local LLM for every user separately. It would have to have a cloud service running all the time. Because starting it again might take like, five minutes, so you can’t really use it in that way,” he argued. “You could have some kind of a solution which you download to your desktop, for example, but then you can use it with your smartphone. Also, if you want to have a multi-device environment, I think this kind of personal server is the only solution.”

The aforementioned knowledge base is another type of AI agent feature that will let the user instruct the device to connect to curated repositories of information to further extend utility.

Saarnio demoed an example of a curated info dump about deforestation in Africa. Once a knowledge base has been ingested onto the device it’s there for the user to query — extending the model’s ability to support them in understanding more about a given topic.

“The user [could say] ‘hey, I want to learn about African deforestation’,” he explained. “Then the AI agent says we have one provider here [who has] created an external knowledge base about this. Would you like to connect to it? And then you can start chatting with this knowledge base. You can also ask it to make a summary or document/report about it or so on.

“This is one of the big things we are thinking — that we need to have graded information in the internet,” he added. “So you could have a thought leader or a professor from some area like climate science create a knowledge base — upload all the relevant research papers — and then the user… could have some kind of trust that somebody has graded this information.”

If Jolla can make this fly it could be pretty smart. LLMs tend to not only fabricate information but present concocted nonsense as if it’s the absolute truth. So how can web users surfing an increasingly AI-generated internet content landscape be sure what they’re being exposed to is bona fide information?

The startup’s answer to this fast-scaling knowledge crisis is to let users point their own on-device AI model at their preferred source/s of truth. It’s a pleasingly human-agency-centric fix to Big AI’s truth problem. Small AI models plus smartly curated data sources could also offer a more environmentally friendly type of GenAI tool than Big AI is offering, with its energy-draining, compute- and data-heavy approach.

Of course, Jolla will need useful knowledge bases to be compiled for this feature to work. It envisages these being curated — and rated — by users and the wider community it hopes will get behind its approach. Saarnio reckons it’s not a big ask. Domain experts will easily be able to collate and share useful research repositories, he suggests.

Jolla Mind2 spotlights another issue: how far outside their control tech users’ experience of software typically is. User interfaces are routinely designed to be intentionally distracting/attention-hogging or even outright manipulative. So another selling point for the product is about helping people reclaim their agency from all the dark patterns, sludge, notifications and whatever else really annoys you about the apps you have to use. You can ask the AI to cut through the noise on your behalf.

Saarnio says the AI model will be able to filter third-party content. For example, a user could ask to be shown only AI-related posts from their X feed, and not have to be exposed to anything else. This amounts to an on-demand superpower to shape what you are and aren’t ingesting digitally.

“The whole idea [is] to create a peaceful digital working environment,” he added.

Saarnio knows better than most how tricky it is to convince people to buy novel devices, given Jolla’s long backstory as an alternative smartphone maker. Unsurprisingly, then, the team is also plotting a B2B licensing play.

This is where the startup sees the biggest potential to scale uptake of their AI device, he says — positing they could have a path to selling “hundreds of thousands” or even millions of devices via partners. Jolla community sales, he concedes, aren’t likely to exceed a few tens of thousands at most, matching the limited scale of their dedicated, enthusiast fan-base.

The AI component of the product is being developed under another (new) business entity, called Venho AI. As well as being responsible for the software brains powering the Jolla Mind2, this company will act as a licensing supplier to other businesses wanting to offer their own brand versions of the personal-server-cum-AI-assistant concept.

Saarnio suggests telcos could be one potential target customer for licensing the AI model — given these infrastructure operators once again look set to miss out on the digital spoils as tech giants pivot to baking generative AI into their platforms.

But, first things first. Jolla/Venho needs to ship a solid AI product.

“We must mature the software first, and test and build it with the community — and then, after the summer, we’ll start discussing with distribution partners,” he added.