It’ll still be a while before quantum computers become powerful enough to do anything useful, but it’s increasingly likely that we will see full-scale, error-corrected quantum computers become operational within the next five to 10 years. That’ll be great for scientists trying to solve hard computational problems in chemistry and materials science, but also for those trying to break the most common encryption schemes used today. That’s because the mathematics behind the RSA algorithm, which, for example, keeps the internet connection to your bank safe, is practically impossible to crack with even the most powerful traditional computer: it would take decades to find the right key. But a sufficiently powerful quantum computer could break these same encryption algorithms almost trivially.
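To make the RSA point concrete, here is a toy sketch in Python using deliberately tiny, hypothetical primes. Anyone who can factor RSA's public modulus can compute the private key. That is instant for a number this small but infeasible with classical computers for real 2048-bit keys, and Shor's algorithm is what would let a large quantum computer do it efficiently. This is an illustration only, not a usable RSA implementation (no padding, insecure key sizes).

```python
# Toy RSA with deliberately tiny primes (hypothetical, for illustration only);
# real keys use primes that are each 1024 bits or more.
p, q = 61, 53
n = p * q                  # public modulus
phi = (p - 1) * (q - 1)    # kept secret by the key owner
e = 17                     # public exponent
d = pow(e, -1, phi)        # private exponent (modular inverse, Python 3.8+)

message = 65
ciphertext = pow(message, e, n)   # anyone can encrypt with (n, e)

def factor(n):
    """Trial division: instant for a toy modulus, hopeless for a 2048-bit one."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n is prime")

# An attacker who knows only the public key (n, e) recovers d by factoring n.
p2, q2 = factor(n)
d_recovered = pow(e, -1, (p2 - 1) * (q2 - 1))
print(pow(ciphertext, d_recovered, n))  # prints 65
```

The whole attack reduces to the `factor` step; everything after it is cheap arithmetic, which is why an efficient factoring method breaks RSA outright.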

This threat has given rise to post-quantum cryptography algorithms, and on Tuesday, the U.S. National Institute of Standards and Technology (NIST) published the first set of standards for post-quantum cryptography: ML-KEM (originally known as CRYSTALS-Kyber), ML-DSA (previously known as CRYSTALS-Dilithium) and SLH-DSA (initially submitted as SPHINCS+). For many companies, this also means that now is the time to start implementing these algorithms.

ML-KEM plays a role similar to that of the public-key encryption methods used today to, for example, establish a secure channel between two servers. At its core, it relies on a lattice-based problem (with purposely generated errors) that researchers say will be very hard to solve, even for a quantum computer. ML-DSA, on the other hand, uses a similar scheme to generate its keys but is all about creating and verifying digital signatures. SLH-DSA also creates digital signatures, but it is built on a different mathematical foundation: hash functions.
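For a rough feel for the "lattice plus purposely generated errors" idea, the sketch below implements a toy version of Regev's learning-with-errors (LWE) encryption for a single bit. This is a simplification, not ML-KEM: the standard works over module lattices of polynomials, encapsulates keys rather than encrypting bits directly, and uses carefully chosen parameters. The dimensions here are hypothetical and far too small to be secure. The point is that the public key hides the secret `s` behind small random errors; the legitimate receiver can tolerate those errors because they stay small, while an attacker faces a noisy system of equations.

```python
import random

# Toy Regev-style LWE encryption of one bit. Insecure, illustrative parameters:
# N (secret dimension) and M (number of samples) are hypothetical; Q = 3329 is
# the modulus ML-KEM uses, borrowed here only for flavor.
N, M, Q = 16, 64, 3329

def small_error(rng):
    # The "purposely generated errors": small random noise.
    return rng.randint(-2, 2)

def keygen(rng):
    s = [rng.randrange(Q) for _ in range(N)]  # secret key
    A = [[rng.randrange(Q) for _ in range(N)] for _ in range(M)]
    # Public key is (A, b) with b = A*s + e (mod Q); e hides s.
    b = [(sum(a * si for a, si in zip(row, s)) + small_error(rng)) % Q for row in A]
    return (A, b), s

def encrypt(pk, bit, rng):
    A, b = pk
    rows = [i for i in range(M) if rng.random() < 0.5]  # random subset of samples
    u = [sum(A[i][j] for i in rows) % Q for j in range(N)]
    v = (sum(b[i] for i in rows) + bit * (Q // 2)) % Q
    return u, v

def decrypt(s, ct):
    u, v = ct
    # v - u*s leaves the summed small errors plus bit * (Q // 2).
    d = (v - sum(uj * sj for uj, sj in zip(u, s))) % Q
    # Near Q/2 means the bit was 1; near 0 (or Q) means it was 0.
    return 1 if Q // 4 < d < 3 * Q // 4 else 0

rng = random.Random(0)
pk, sk = keygen(rng)
for bit in (0, 1):
    assert decrypt(sk, encrypt(pk, bit, rng)) == bit
```

Decryption always succeeds here because at most 64 errors of size 2 can accumulate (at most 128), well below the Q/4 decision margin; real schemes choose their noise distribution and parameters so that failures are vanishingly rare rather than impossible.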

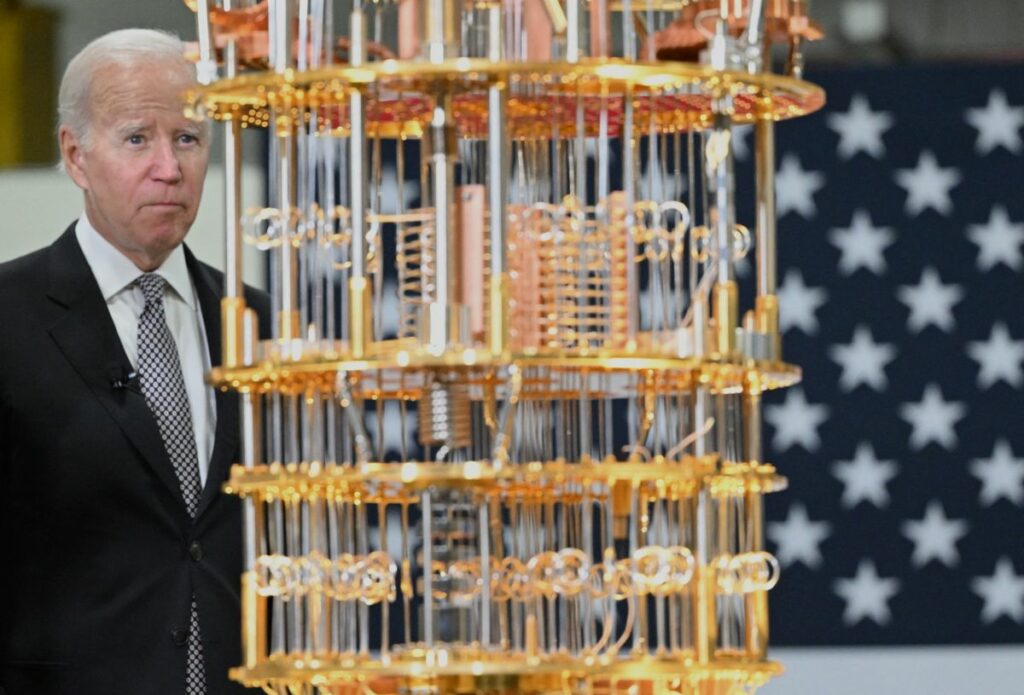

Two of these algorithms (ML-KEM and ML-DSA) originated at IBM, which has long been a leader in building quantum computers. To learn a bit more about why we need these standards now, I spoke to Dario Gil, the director of research at IBM. He thinks that we will hit a major inflection point around the end of the decade, which is when IBM expects to build a fully error-corrected system (that is, one that can run for extended periods without the system breaking down and becoming unusable).

“Then the question is, from that point on, how many years until you have systems capable of [breaking RSA]? That’s open for debate, but suffice to say, we’re now in the window where you’re starting to say: all right, so somewhere between the end of the decade and 2035 the latest — in that window — that is going to be possible. You’re not violating laws of physics and so on,” he explained.

Gil argues that now is the time for businesses to start considering what cryptography will look like once RSA is broken. A patient adversary could, after all, collect encrypted data today and then, a decade from now, use a powerful quantum computer to decrypt it. But he also noted that few businesses, and perhaps even few government institutions, are aware of this risk.

“I would say the degree of understanding of the problem, let alone the degree of doing something about the problem, is tiny. It’s like almost nobody. I mean, I’m exaggerating a little bit, but we’re basically in the infancy of it,” he said.

One excuse for this, he said, is that there weren’t any standards yet, which is why the new standards announced Tuesday are so important (and the process for getting to a standard, it’s worth noting, started in 2016).

Even though many CISOs are aware of the problem, Gil said, the urgency to do something about it is low. That’s also because for the longest time, quantum computing was one of those technologies that, like fusion reactors, was always five years away from becoming a reality. After a decade or two of that, it became something of a running joke. “That’s one uncertainty that people put on the table,” Gil said. “The second one is: OK, in addition to that, what is it that we should do? Is there clarity in the community that these are the right implementations? Those two things are factors, and everybody’s busy. Everybody has limited budgets, so they say: ‘Let’s move that to the right. Let’s punt it.’ The task of institutions and society to migrate from current protocols to the new protocol is going to take, conservatively, decades. It’s a massive undertaking.”

It’s now up to the industry to start implementing these new algorithms. “The math was difficult to create, the substitution ought not to be difficult,” Gil said about the challenge ahead, but he also acknowledged that that’s easier said than done.

Indeed, a lot of businesses may not even have a full inventory of where they are using cryptography today. Gil suggested that what’s needed here is something akin to a “cryptographic bill of materials,” similar to the software bill of materials (SBOM) that most development teams now generate to ensure that they know which packages and libraries they use in building their software.
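A real cryptographic bill of materials would be generated by tooling that scans codebases and infrastructure, but the triage step it enables can be sketched simply. The Python below assumes a hypothetical, hand-written inventory (the component names and the `CryptoUse` record are illustrative, not any standard CBOM format) and sorts entries into three buckets: public-key algorithms based on factoring or discrete logarithms, which a large quantum computer running Shor's algorithm could break; uses of the new NIST algorithms; and everything else, which needs a human review.

```python
from dataclasses import dataclass

# Public-key algorithms whose security rests on factoring or discrete logarithms
# (including elliptic-curve variants); Shor's algorithm threatens all of them.
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA", "Ed25519", "X25519"}
# The algorithms in NIST's first post-quantum standards.
POST_QUANTUM = {"ML-KEM", "ML-DSA", "SLH-DSA"}

@dataclass
class CryptoUse:
    component: str   # hypothetical service or library name
    algorithm: str
    purpose: str

def triage(inventory):
    """Group inventory entries into migrate / post-quantum / review buckets."""
    report = {"migrate": [], "post-quantum": [], "review": []}
    for use in inventory:
        if use.algorithm in QUANTUM_VULNERABLE:
            report["migrate"].append(use)
        elif use.algorithm in POST_QUANTUM:
            report["post-quantum"].append(use)
        else:
            # Symmetric ciphers, hashes and anything unrecognized get a manual look.
            report["review"].append(use)
    return report

# Hypothetical inventory entries, for illustration only.
inventory = [
    CryptoUse("payments-api", "RSA", "TLS key exchange"),
    CryptoUse("auth-service", "ECDSA", "token signing"),
    CryptoUse("backup-job", "AES-256-GCM", "data at rest"),
    CryptoUse("pilot-vpn", "ML-KEM", "key exchange"),
]
report = triage(inventory)
for bucket, uses in report.items():
    print(bucket, [u.component for u in uses])
```

The hard part in practice is building the inventory, not the lookup; once it exists, deciding what to migrate first becomes a matter of filtering it.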

As with so many things quantum, now feels like a good time to prepare for the technology’s arrival, whether that means learning how to program these machines or how to safeguard your data from them. And, as always, you have about five years to get ready.