ChatGPT hallucinated about music app Soundslice so often, the founder made the lie come true

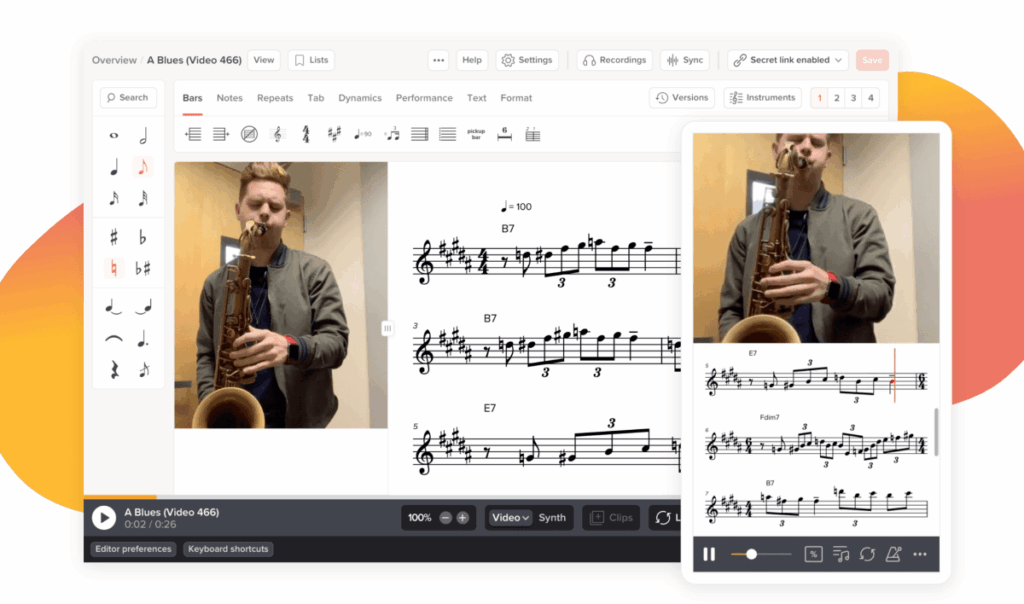

Earlier this month, Adrian Holovaty, founder of music-teaching platform Soundslice, solved a mystery that had been plaguing him for weeks. Weird images of what were clearly ChatGPT sessions kept being uploaded to the site. Once he solved it, he realized that ChatGPT had become one of his company’s greatest hype men – but it was […]